How the Race for Compute Is Set to Upend the Systematic Trading World as We Know It

Why Advanced Computing Power Is Becoming the Ultimate Edge in Quant Finance

Research contributions:

Abhinav Choudhry (Researcher), Theodore Morapedi (Founder & CEO)

The Historical Arc: How We Got Here

Few industries have been reshaped by technology as radically as the financial markets, where the rise of hardware and software systems has transformed every step of the trading lifecycle. Initially, computers were used primarily for clerical tasks and data management, and even then mainly by exchanges. However, computation and automation were always on the horizon, led first by the New York Stock Exchange, which introduced the Designated Order Turnaround (DOT) system in 1976 so that investors’ orders could reach specialists located on the exchange floor. Trading became electronic, paving the way for trades that would execute automatically given preset conditions. For the first time, computers could be used not just for best-price execution but to plan and execute trades systematically based on a set of pre-agreed rules. The first generation of algorithmic trading firms emerged not long after, in the form of Renaissance Technologies, DE Shaw, Citadel and others.

(*) Did You Know?

Alan Kay, a computer designer, created AutEx, the world’s first automated trading system, in 1968; it was available as a subscription service through an AutEx console [1].

The Emergence of Compute

Computers were initially used mostly for data management and transmission, but this changed with the emergence of algorithmic trading. Companies like Citadel used computers to find predictive patterns in securities price data, in both high-frequency trading (HFT) and medium-frequency trading (MFT). At the same time, its market-making operations were working on the problem of building the most efficient order matching engines in the world. Exchange volumes rose sharply after the advent of electronic trading, ushering in a wave of new participants such as Tower Research Capital, Jane Street, Jump Trading, and HRT. Computers offered enormous opportunities for profit, but they were an emerging technology and required continual upgrades and maintenance.

(*) Did You Know?

NYSE’s DOT was replaced by SuperDOT, which remained in use, with upgrades, until as late as 2009.

Drivers of the Compute Arms Race

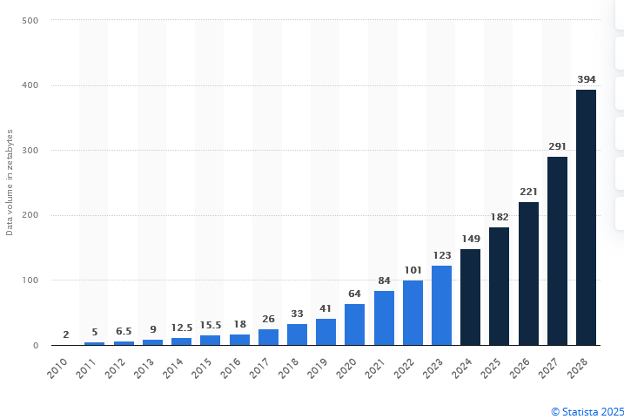

Fundamentally, the nature of the factors that drive markets does not change with time, but there is continuous churn in the details. So, while money supply data is not much more complex now than in the 1960s, consumer data is vastly more complex today. In a world where almost everyone has a digital footprint, and many people have multiple devices generating data, the amount of data is growing almost vertically. Consider that data volume went up over 60 times between 2010 and 2023, from 2 zettabytes to 123 zettabytes, and is projected to reach almost 400 zettabytes by 2028; one zettabyte is a trillion gigabytes.
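To put those figures in perspective, the short sketch below converts them into a growth multiple and an implied compound annual growth rate. The function names are illustrative, and the 2028 value is rounded to 400 zettabytes as an assumption, since the projection is only stated as "almost 400".

```python
def growth_multiple(start: float, end: float) -> float:
    """How many times larger `end` is than `start`."""
    return end / start


def compound_annual_growth(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


# Figures quoted in the text, in zettabytes.
ZB_2010, ZB_2023 = 2, 123
ZB_2028 = 400  # assumption: "almost 400" rounded to 400

multiple_2010_2023 = growth_multiple(ZB_2010, ZB_2023)
rate_2010_2023 = compound_annual_growth(ZB_2010, ZB_2023, 2023 - 2010)
rate_2023_2028 = compound_annual_growth(ZB_2023, ZB_2028, 2028 - 2023)

print(f"2010 to 2023: {multiple_2010_2023:.1f}x, {rate_2010_2023:.1%} per year")
print(f"2023 to 2028 (projected): {rate_2023_2028:.1%} per year")
```

Under these inputs, the historical figures imply growth of roughly 37% per year, while the projection to 2028 implies a somewhat slower rate of roughly 27% per year on a far larger base.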

For systematic traders, this explosion of data does not represent simply more noise—it is the bedrock for signal extraction, predictive models, and automated execution. More data means more potential alpha, but this is only available if you have the compute power to harness it.

AI/ML

AI has been evolving over the years to become better at making sense of large unstructured datasets. Many different machine learning approaches are employed, but we are increasingly seeing the use of deep learning and now the wholesale use of generative AI. Not all trading problems require deep learning or generative AI, but firms increasingly feel the pressure to use larger models. The compute power required for massive datasets and AI models (e.g., GPT-4-sized models) in signal discovery is immense. In the HFT world, it is crucial to test alphas quickly in a realistic simulated environment, since this confers a big edge: firms with greater compute could finish a simulation in seconds, and rapid feedback can substantially accelerate the iterative process of finding alpha.

Data Quality

Data quality is arguably as important as compute. Bloomberg trained the domain-specific, 50-billion-parameter BloombergGPT at a cost of millions of dollars. 51.27% of the training data, a whopping 363 billion tokens, came from Bloomberg’s financial datasets, with the rest coming from public datasets [2]. Having proprietary domain-specific data is incredibly powerful. Higher-quality data might lead to superior performance given the same compute. However, for firms that are processing more of the same data, and using AI to sift through data in real time, there is really no substitute for using more compute to gain alpha. The type of data being processed is changing fast, and professionals in hedge funds are increasingly crafting models from alternative data. The patterns in such data are not obvious at all, and today gargantuan amounts of data need to be processed in all sorts of ways to glean reliable trading signals. Processing satellite images, doing sentiment analysis of social media, analysing web search trends, and processing high-resolution financial price/volume data: all demand compute scalability.

Market Fragmentation

As markets become increasingly fragmented across multiple exchanges, dark pools, and alternative trading venues, the demand for computational power has surged:

High-speed execution algorithms, such as Smart Order Routing (SOR), must process millions of calculations in real time to identify optimal trade placement and execute orders before signals decay.

In crypto markets, fragmentation is even more pronounced, with liquidity spread across centralized and decentralized exchanges. This structural complexity means that firms engaged in HFT and MFT have no choice but to invest in advanced compute infrastructure to maintain execution efficiency and price discovery.

Firms deploying SOR must account for latency differentials across venues: milliseconds matter when liquidity is scattered.
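As a rough illustration of why routing across fragmented venues is a computational problem, the sketch below splits a buy order greedily across venues ranked by a latency-adjusted price. It is a toy model under stated assumptions, not a description of any firm’s router: the `Quote` fields, the venue names, the prices, and the linear `penalty_per_ms` charge for slower venues are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quote:
    venue: str         # hypothetical venue identifier
    ask: float         # displayed ask price
    size: int          # shares available at that price
    latency_ms: float  # assumed latency to reach the venue


def route_buy_order(quantity, quotes, penalty_per_ms=0.001):
    """Greedily split a buy order across venues by latency-adjusted price.

    Each venue's ask is penalised by `penalty_per_ms` per millisecond of
    latency (an assumed, simplistic proxy for the risk that the quote is
    gone on arrival). Returns the list of fills and any unfilled quantity.
    """
    ranked = sorted(quotes, key=lambda q: q.ask + penalty_per_ms * q.latency_ms)
    remaining = quantity
    fills = []
    for quote in ranked:
        if remaining <= 0:
            break
        take = min(remaining, quote.size)
        if take > 0:
            fills.append((quote.venue, take, quote.ask))
            remaining -= take
    return fills, remaining


# Hypothetical market snapshot: the cheapest displayed price sits on the slowest venue.
quotes = [
    Quote("EXCH_A", 100.02, 300, 0.2),
    Quote("EXCH_B", 100.01, 200, 5.0),
    Quote("DARK_C", 100.00, 100, 20.0),
]
fills, unfilled = route_buy_order(500, quotes)
for venue, shares, price in fills:
    print(f"{venue}: buy {shares} @ {price}")
```

With these made-up numbers the router hits EXCH_B first rather than DARK_C, even though DARK_C shows the lowest ask, because the latency penalty outweighs the one-cent price advantage. A production router would re-run a far richer version of this calculation on every market data update across every venue, which is where the compute demand comes from.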

(*) Did You Know?

In 2023, the New York Stock Exchange processed over 350 billion messages per day, a 700% increase from a decade earlier, highlighting the growing computational demands placed on trading infrastructure.

Barriers to Entry

The game of compute is an expensive one. It requires data centres that may span fields, along with dedicated electricity infrastructure. In very recent news, Alex Gerko’s algorithmic trading firm XTX is planning to build five data centres at a cost of €1 billion. Over the long run, owning a data centre confers an enormous advantage over renting capacity, and given how quickly compute demand is scaling, there is scarcely any spare capacity available. A smaller firm with limited resources cannot compete effectively in HFT and MFT with larger firms possessing such infrastructure. Compute investments take time to pay off, and few firms have the capital and time to take part in this arms race.

The real question isn’t just who wins but who even gets to compete. Without significant capital investment in compute, smaller firms face the existential risk of being locked out of entire market segments.

On the other hand, firms relying on cloud services face their own inherent risk of cloud dependency — outages, latency bottlenecks, or worse, cyberattacks. For strategies built on real-time signal research, even minor disruptions can be catastrophic.

It’s becoming increasingly clear that the makeup of firms controlling global securities markets is shifting. At the centre of this structural change lies computational scale—and those with the means to build it.

(*) Did You Know?

A 1-millisecond latency difference could cost systematic traders hundreds of millions per year in profits, which is why firms invest heavily in fiber optics and co-location near exchanges.

Winners and Losers

Overall, the race for compute implies that well-capitalised firms such as Citadel or Two Sigma, which are able to invest in compute infrastructure, will dominate, while smaller firms that cannot compete on compute or lack alternative data ingestion will plausibly be outcompeted. We might witness an ever greater concentration of trading in the larger firms. Smaller firms might have to focus more on low-frequency trading, where the advantage from compute and latency is significantly smaller.

(*) Did You Know?

Training a single large-scale AI model can consume as much energy as 10,000 households annually—an under-explored issue for financial firms chasing alpha.

Future Trends and Predictions

Looking ahead, the trajectory is clear – AI-driven quant strategies will become more complex and compute-hungry, driving unprecedented demand for computational power. Medium-frequency trading, which once might have run on a single server with optimized code, may soon involve clusters of AI models running on distributed GPU networks, all coordinated to generate and execute trades. Industry insiders predict a few trends by 2030:

Quant firms will routinely train models on billions of data points, including text, image, video and audio data, to extract trading signals. This will require access to what today would be considered supercomputers. By 2027, it is likely that major hedge funds will either build their own HPC data centers (as XTX is doing) or lease capacity from cloud providers equivalent to several thousand top-end GPUs at a time. The scale of compute used in finance could start to rival that of tech companies; indeed, one Chinese AI developer (DeepSeek) was rumoured to be using tens of thousands of high-end chips in secret [3]. While not every fund will go that far, the bar is rising fast.

Custom AI hardware and acceleration will become commonplace. To gain millisecond advantages, some HFT firms are already exploring specialized chips (like FPGAs and ASICs) for running trained neural networks ultra-fast at exchange co-location sites. Over the next few years, we may see AI inference engines co-located with trading servers to execute AI-driven strategies with minimal latency. At the research end, firms might employ next-generation AI accelerators (such as Google’s TPUs or upcoming neuromorphic chips) to speed up model training. The bottom line is an arms race not just in algorithms, but in the machines that run them.

Integration of AI into all parts of the trading pipeline: from idea generation to backtesting to execution and risk management. This holistic adoption will multiply compute needs. Imagine an MFT strategy development workflow in 2030: a research AI combs through petabytes of data to suggest new strategy ideas; those ideas are automatically backtested on decades of high-resolution data (via cloud HPC); an execution AI then simulates market impact and optimizes how to trade the strategy; finally, a monitoring AI watches live trades and manages risk in real-time. Each of those steps demands significant computational heft and likely distinct AI models. Firms embracing this fully will need to scale their infrastructure accordingly, perhaps by an order of magnitude relative to today and will be among the most profitable firms per employee of the future.

Collaboration with tech firms and cloud providers: As the demand for computing power and AI talent grows, we’ll see more partnerships like Citadel Securities working with Google Cloud, or NASDAQ partnering with AI startups for market surveillance. The wall between Silicon Valley and Wall Street is thinning, with hedge funds hiring from big tech, and big tech offering specialized financial AI services. This could make cutting-edge AI more accessible to mid-sized funds (through cloud-based AI platforms), further accelerating the trend. However, the top players will likely continue pouring capital into proprietary infrastructure to maintain a competitive edge in speed and secrecy.

Quantum Computing: A New Front in the Compute Arms Race

Quantum computing is moving from theory to application, with major financial institutions investing heavily in research and trials. JPMorgan, Goldman Sachs, and HSBC are among those testing quantum algorithms for portfolio optimization, derivatives pricing, and risk modeling. HSBC has also piloted quantum-resistant encryption for tokenized assets, anticipating future cybersecurity risks.

Leading hedge funds like Renaissance, DE Shaw, and Two Sigma are quietly exploring quantum computing to gain an edge in portfolio optimization, risk analysis, and pricing models. DE Shaw has already invested in QC Ware, betting that quantum machines will eventually outperform classical supercomputers in solving multi-dimensional financial problems. Early tests back in 2015 showed a 1,000-qubit quantum processor performing up to 100 million times faster than a conventional core on selected benchmark problems, reinforcing quantum’s potential in complex financial modeling. However, skeptics argue that modern chips and parallel computing remain sufficient for today’s systematic trading needs. Despite this, top quant funds are hedging their bets: should quantum computing mature faster than expected, the first adopters will hold a decisive advantage [4].

One concrete example of quantum advantage in finance comes from a collaboration between Goldman Sachs and QC Ware, where researchers developed a quantum Monte Carlo algorithm capable of accelerating risk simulations. Their findings suggest that tasks traditionally taking several hours on classical systems could be completed in just minutes using quantum methods — a leap that, if scalable, could redefine portfolio optimization and scenario analysis at large trading firms.

Google’s Willow quantum processor, the successor to Sycamore, has significantly advanced quantum computing capabilities, improving coherence times and scalability. These gains build on Sycamore’s earlier milestone — performing calculations in 200 seconds that would take a classical supercomputer an estimated 10,000 years. With Willow, the gap between classical and quantum computation is narrowing faster than many expected. For financial firms, this signals not only faster simulation and optimization capabilities but a fundamental shift in the scale and structure of financial modeling workflows.

Investment in the sector is growing rapidly. Global spending on quantum technology is projected to rise from $80 million in 2022 to nearly $19 billion by 2032, with financial services and quant traders specifically among the leading adopters. However, quantum processors are not expected to replace traditional computing but rather complement existing high-performance computing (HPC) infrastructure. As firms build hybrid architectures integrating classical and quantum systems, the demand for computational power is likely to increase rather than decline.

Bonus: DeepSeek: Is Compute Overrated?

What is DeepSeek?

On January 27th 2025, news about DeepSeek AI caused Nvidia’s shares to drop 17% in one day, a nearly $600 billion erosion in market capitalisation and the largest single-day drop ever for a company. The key reason is that DeepSeek claims its LLM outperforms the foundation models behind products such as ChatGPT in many respects despite costing only one twentieth as much to train, which implies that much less compute might be required for AI than previously estimated. The biggest shock, though, is that DeepSeek was built not by a multi-trillion-dollar enterprise but by a comparatively small company in China, and that it has made its work, including its code, open-source! Most of the leading large foundation models are proprietary and costly. DeepSeek can be used for free and its weights can be modified at will. This will level the playing field for small firms for now, at least for large language models (LLMs).

Actual cost might be understated:

It must be clarified that DeepSeek’s stated training cost of $6 million includes only the resource rental costs of the actual training run. The actual cost of AI development also includes personnel costs as well as the cost of pilot training runs, so its true cost of development might be higher.

Cost structures might require a rethink:

If compute is cheaper, that is actually better for overall profitability, but DeepSeek is a rude awakening for any firm that is getting complacent simply because of how much compute it possesses. Only in a game of comparable efficiencies will the greatest compute win, and right now it appears that there are still major efficiency differences between implementations. CFOs and CTOs may need to re-examine their cost structures significantly instead of pressing ahead on compute alone. Nevertheless, the lessons of DeepSeek apply mostly to LLMs, which are just one type of AI architecture being used in systematic trading. In the highly competitive world of trading, there are many AI algorithms, and the fight for lower latency and higher-quality signals will keep demanding compute.

Jevons paradox:

DeepSeek doubtless has mammoth implications for firms making budget allocations but it does not imply that compute itself will become any less relevant. The bigger takeaway is actually what Satya Nadella posted on Twitter immediately after, “Jevons paradox strikes again! As AI gets more efficient and accessible, we will see its use skyrocket, turning it into a commodity we just can't get enough of.” More efficient compute is going to make AI accessible to smaller firms and even they might be able to deploy their custom AI models.
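The Jevons argument can be made concrete with a stylised demand model. The sketch below assumes constant-elasticity demand for compute, which is a textbook simplification rather than anything claimed by DeepSeek or Nadella, and the elasticity values are purely hypothetical. The only figure taken from the text is the one-twentieth cost ratio.

```python
def total_spend_multiple(price_multiple: float, elasticity: float) -> float:
    """Change in total spend when the unit price changes by `price_multiple`.

    Assumes constant-elasticity demand: quantity is proportional to
    price ** (-elasticity). Spend is price times quantity.
    """
    quantity_multiple = price_multiple ** (-elasticity)
    return price_multiple * quantity_multiple


# Effective cost falls to one twentieth (the ratio quoted in the text).
PRICE_MULTIPLE = 1 / 20

# Hypothetical elasticities: inelastic, unit-elastic, elastic demand.
for elasticity in (0.5, 1.0, 1.5):
    spend = total_spend_multiple(PRICE_MULTIPLE, elasticity)
    print(f"elasticity {elasticity}: total spend changes by {spend:.2f}x")
```

In this toy model, total spending on compute rises after an efficiency gain only when demand is elastic (elasticity above 1). The Jevons view amounts to a bet that demand for AI compute sits in that regime.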

Compute will remain king:

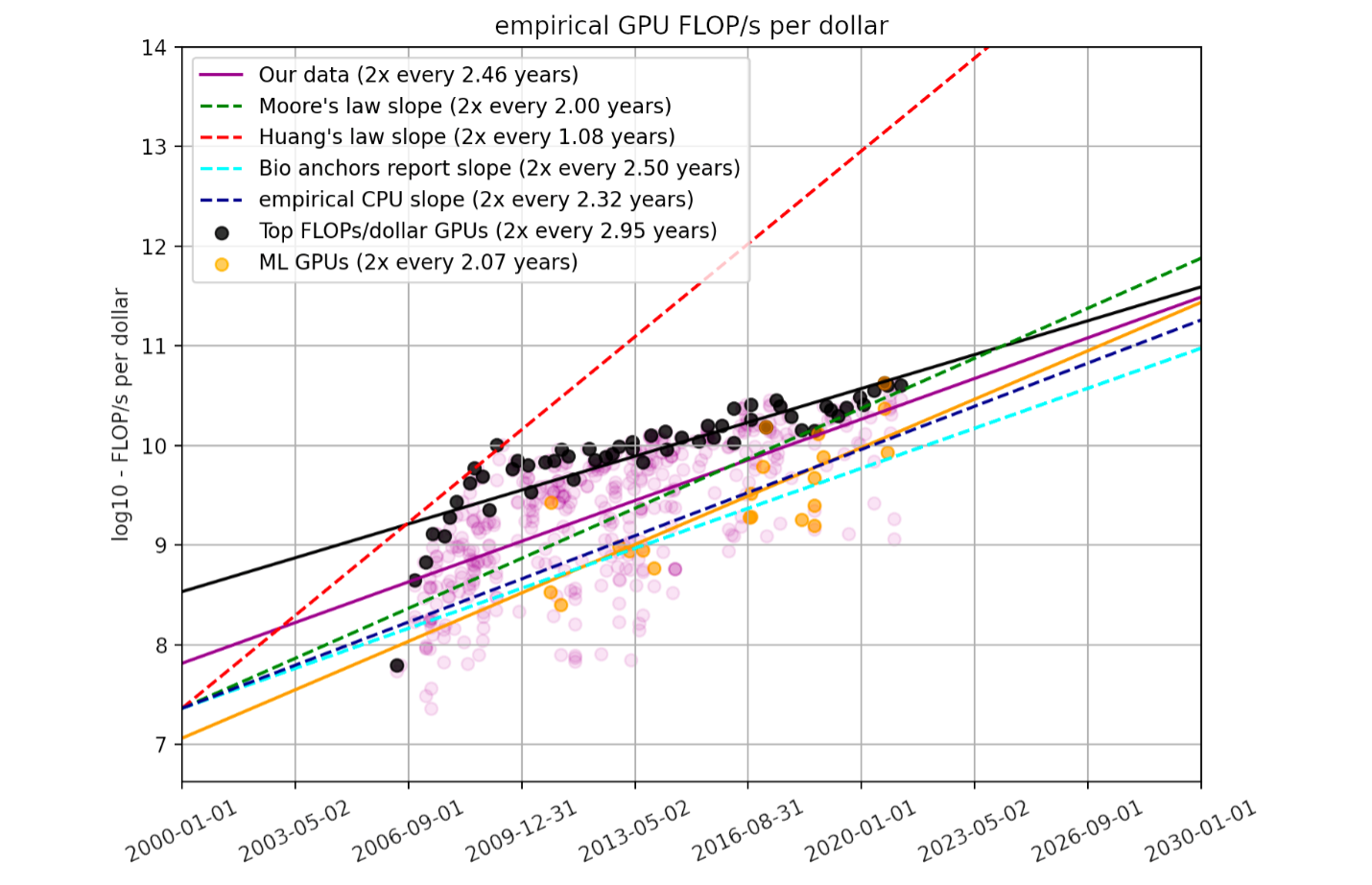

Larger organisations with more resources will nevertheless gain an edge by throwing more compute at the problem because, despite exponential increases in the compute thrown at AI so far, performance improvements have persisted: it really does appear that more compute is better. We can also observe from the accompanying chart that compute has been becoming more efficient over time on its own: the number of floating point operations per second (FLOP/s) per dollar doubles roughly every 2.5 years (Hobbhahn, 2022) [5].
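To see what a 2.5-year doubling time means in practice, the sketch below turns it into a growth multiple over a given horizon and into an equivalent annual improvement rate. It assumes the trend continues smoothly, which is an extrapolation rather than a finding of the cited study, and the function names are illustrative.

```python
import math

DOUBLING_TIME_YEARS = 2.5  # FLOP/s per dollar doubling time quoted in the text


def price_performance_multiple(years: float, doubling_time: float = DOUBLING_TIME_YEARS) -> float:
    """FLOP/s per dollar after `years`, relative to today, if the trend holds."""
    return 2 ** (years / doubling_time)


def years_to_reach(multiple: float, doubling_time: float = DOUBLING_TIME_YEARS) -> float:
    """Years needed for FLOP/s per dollar to improve by `multiple`."""
    return doubling_time * math.log2(multiple)


annual_rate = price_performance_multiple(1) - 1
print(f"Implied improvement per year: {annual_rate:.1%}")
print(f"Improvement over 10 years: {price_performance_multiple(10):.0f}x")
print(f"Years to a 100x improvement: {years_to_reach(100):.1f}")
```

On these assumptions a dollar buys about 16 times more FLOP/s after a decade, so a firm that simply holds its compute budget flat still sees its capacity grow, while a competitor that also grows its budget compounds both effects.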

The computing power required for processing such big data is immense and keeps on scaling. Individual computers may have shrunk from square feet to square centimetres, but ironically the overall square footage occupied by computers is only going up. As the volume of data expands and AI takes over more operations, compute will remain king [6].

Conclusion

Firms with a strong tech DNA and a willingness to spend on cutting-edge computing are poised to dominate. Top-tier quant trading outfits like XTX, Jump Trading, Citadel Securities, and Jane Street have the low-latency hardware and are now augmenting their stack with modern AI architecture (for example, using deep learning to optimize execution and forecasting performance). Large quant hedge funds like Two Sigma, D.E. Shaw, Citadel, and Renaissance have the scale – both in capital and computing clusters – to train enormous models and crunch petabytes of data. And forward-looking multi-manager, multi-strategy funds and asset managers are actively recruiting AI PhDs and building HPC labs to ensure they don’t fall behind. This combination of AI expertise and supercharged infrastructure will be the key differentiator in the next 5–10 years of quantitative investing.

If your firm isn’t preparing for this shift, the window to act is closing!

Onyx Alpha Partners will be closely watching developments in the industry as the race intensifies for compute, as well as for the AI talent that will help achieve these breakthroughs. We are partnering with elite firms we consider to be the early winners in this race for talent, silicon and code, so get in touch to learn about the impactful work we are doing in this space!

If your firm is planning its next investment in compute infrastructure or quant talent, let’s discuss how Onyx Alpha Partners can support your growth.

Instantly book a 30-minute consultation using the link below:

https://calendly.com/tmorapedi/one-on-one-chat-with-theo

SOCIAL MEDIA & CONTACT INFORMATION

Email: info@onyxalpha.io

WhatsApp Business: +44 7537 141 166

UK Business Line: +44 7537 188 885

Reference List:

(2024). Utexas.edu. https://www.laits.utexas.edu/~anorman/long.extra/Projects.F97/Stock_Tech/initial.htm

Wu, S., Irsoy, O., Lu, S., Dabravolski, V., Dredze, M., Gehrmann, S., ... & Mann, G. (2023). Bloomberggpt: A large language model for finance. arXiv preprint arXiv:2303.17564.

https://www.reuters.com/technology/artificial-intelligence/high-flyer-ai-quant-fund-behind-chinas-deepseek-2025-01-29/#:~:text=However%2C%20some%20tech%20executives%20have,computing%20power%20at%20its%20disposal

Hobbhahn, Marius. (2022). Trends in GPU Price-Performance. Epoch AI. https://epoch.ai/blog/trends-in-gpu-price-performance

https://www.delltechnologies.com/asset/en-in/products/ready-solutions/industry-market/hpc-ai-algorithmic-trading-guide.pdf#:~:text=such%20as%20capital%20computations%2C%20credit,more%20demand%20for%20scalable%20computation

Disclaimer:

This white paper has been prepared by Onyx Alpha Partners for informational and analytical purposes only. It is based on a combination of anonymized interviews with industry professionals, internal proprietary research, and publicly available data. While every effort has been made to ensure accuracy, completeness, and relevance, the views and insights presented herein reflect prevailing conditions as of May 2025 and are subject to change without notice.

This document does not constitute investment advice, solicitation, or an offer to buy or sell any securities or financial instruments. Any opinions expressed are those of Onyx Alpha Partners and should not be relied upon as a substitute for independent judgment or consultation with qualified professionals.

Onyx Alpha Partners disclaims all liability for any direct or indirect loss arising from the use of this report or its contents. Recipients are advised to use this material at their own discretion and to verify any information before relying on it.